THUS SPOKE MY OLD MAN !

Welcome to the Idle Words Said in Passing!

Reflections on the Mysterious Beginnings and the Still Mysterious Present of Quantum Mechanics:

Quantum Mechanics - Where reality unfolds through uncertainty, probability, and profound possibility!

Analysis and Observations by Jan Vade

A PERSONAL JOURNEY INTO A QUANTUM FIELD

When I first approached and looked at Quantum Mechanics I ran into a brick wall, a classical brick wall, that is! There was much confusion in my mind. I voiced these perplexities and uncertainties to a young man, one Anthony Vade, and this is what he said:

"Are you still confused by quantum theory... of course, you are, it is confusing by its innate complexity! It is understandable for one to be confused, even the Quantum Researchers and other scientists are. Many of them cannot fully explain where they are in their understanding or where they hope their exploration will lead them... and that's OK too, however, it doesn't stop these concepts from being worth exploring and exploiting, even for the lay people who might not immediately see or comprehend how Quantum Theory relates to their practical everyday world. So put down your academic caps and let's have some "real talk" about Quantum Theory and how it can be understood by people who do not dwell in esoteric discussion circles and / or libraries".

At the time I felt that to a casual observer, particularly one well-versed in philosophy, fine art, and classical studies, the emerging realities of quantum physics and advanced mathematics may appear remarkably unfamiliar and challenging to reconcile with traditional logic and reasoning.

From a superficial perspective and without rigorous examination, one might understandably perceive the reasoning employed by these researchers as fundamentally inconsistent with the principles traditionally upheld in classical sciences, perhaps even bordering on irrational. It occurred to me that these researchers appear to readily embrace the concept of superposition while seemingly overlooking the fundamental principles of energy conservation.

However, after carefully examining our everyday observable reality, I have come to appreciate that the achievements of quantum physics are not only profoundly remarkable, but also very practical. Consider the following examples:

+ Semiconductors & Transistors: Quantum mechanics governs electron behaviour in semiconductors, forming the foundation of modern electronics, including computers and smartphones.

+ Lasers & LED Technology: Quantum principles facilitate the development of lasers used in medical treatments, barcode scanners, fibre-optic communication, and energy-efficient LED lighting.

+ Magnetic Resonance Imaging (MRI): The principles of quantum mechanics underpin MRI technology, enabling detailed imaging of internal body structures for precise medical diagnostics.

+ Solar Cells & Photoelectric Effect: Quantum mechanics explains the conversion of sunlight into electricity in solar panels, driving progress in renewable energy technologies.

+ Global Positioning System (GPS): GPS technology is reliant on quantum physics, particularly atomic clocks, for precise location tracking. Future advancements in quantum communication may further enhance synchronization accuracy, potentially improving location precision to within fifteen centimetres.

These advancements illustrate how quantum mechanics has revolutionized various technological domains, enhancing efficiency and precision in many practical applications.

In this frame of mind, full of doubts and humility, and full of wonder, I embarked on a study of Quantum Theory. Whilst not intending to become an expert or an engineer, I studied for the purpose of my own enlightenment and better understanding of this science. I must give thanks to my Old Man for his help in discussing it all. My Old Man is quite adept in merging the old and the new, looking at things differently.

… and, if you are interested, dear reader, let me show you what I have learned:

WHERE IT ALL BEGAN …

Some one hundred years ago, a young physicist of 23 years, Werner Heisenberg, travelled to the remote and windswept island of Helgoland in the North Sea. His primary intent was to find relief from his hay fever, as the island's harsh climate leaves it nearly devoid of vegetation. During this time, in his solitude, he formulated a series of calculations that would ultimately lay the foundation for the development of quantum mechanics. This year, a hundred years later, individuals across the globe, including those on Helgoland itself, will gather to commemorate the impact and extraordinary journey of this groundbreaking scientific discovery. Quantum theory possesses remarkable depth and complexity, and while it is often renowned, perhaps primarily, for its inherent strangeness, it is equally important to acknowledge its enduring elegance and its mysteries. This article offers an exploration of these aspects, inviting reflection on the significance of quantum mechanics.

To begin, let us revisit the origins of quantum theory and pay tribute to some of its lesser-known pioneers. Since its inception, quantum theory has profoundly influenced numerous fields, a transformation that will continue as quantum computing advances. We shall explore this further in this article.

At the same time, fundamental questions persist regarding the true implications of quantum theory. It suggests that the deepest layers of reality operate in ways that challenge conventional understanding. However, emerging experimental research may finally provide insight into what is arguably the most significant scientific theory of the past century.

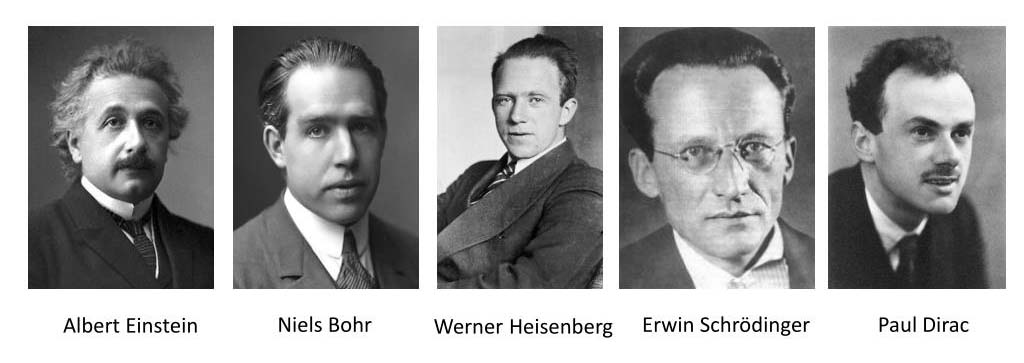

QUANTUM THEORY'S PIONEERS

It is a widely held view that discussions of the origins of quantum mechanics frequently overlook one of its central contributors, leading to ongoing misunderstandings regarding the theory's interpretation.

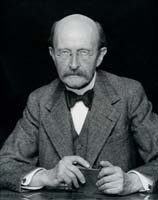

It has been suggested that the narrative surrounding the birth of quantum mechanics is frequently told, though not always accurately. Introductory quantum physics courses often focus on the iconic equation formulated by Erwin Schrödinger in 1926, which describes quantum waves. This emphasis on quantum waves has arguably contributed to a persistent confusion that endures to this day. The true emergence of quantum theory occurred a year earlier, primarily through the work of Max Born and his collaborators. This point is worth highlighting not only to afford Born the credit he is due but also because the overemphasis on Schrödinger's waves has contributed significantly to contemporary misconceptions about what quantum phenomena reveal regarding the nature of reality.

To begin at the outset: It is frequently stated that quantum physics arrived unexpectedly at a time when physicists believed they had already comprehended all the fundamental laws of nature. However, such a belief was never accurate. By the close of the 19th century, physicists were grappling with numerous unresolved questions concerning the most fundamental aspects of the physical world.

-------------------------------

Explanatory notes:

Werner Heisenberg (1901-1976), a German theoretical physicist, revolutionized quantum mechanics with his development of matrix mechanics and the formulation of the uncertainty principle. Awarded the 1932 Nobel Prize in Physics, Heisenberg's work laid the foundation for modern quantum theory, influencing both science and philosophy.

Erwin Rudolf Josef Alexander Schrödinger (12 August 1887 - 4 January 1961), occasionally rendered as Schroedinger or Schrodinger, was a distinguished Austrian-Irish theoretical physicist whose pioneering contributions significantly advanced quantum theory. He is particularly renowned for formulating the Schrödinger equation, a fundamental equation that enables the calculation of a system's wave function and its dynamic evolution over time. Furthermore, in 1935, Schrödinger introduced the term "quantum entanglement".

Max Karl Ernst Ludwig Planck (23 April 1858 - 4 October 1947) was a German theoretical physicist whose discovery of energy quanta won him the Nobel Prize in Physics in 1918. He is known for the Planck constant, which is of foundational importance for quantum physics, and which he used to derive a set of units, today called Planck units, expressed only in terms of fundamental physical constants.

-------------------------------------------

This is why nobody paid much attention when, in October 1900, Max Planck came up with a simple but "unjustified" equation in trying to make sense of certain obscure experimental measurements of the electromagnetic radiation inside hot cavities. ("Carlo Rovelli on what we get wrong about the origins of quantum theory")

The Planck constant (h) is a fundamental physical constant that plays a crucial role in quantum mechanics. ("Planck Energy - Definition & Detailed Explanation - Sentinel Mission") It establishes a direct relationship between a photon's energy and its frequency, meaning that the energy of light is quantized rather than continuous. Additionally, in wave mechanics, the wavelength of a matter wave is determined by dividing the Planck constant by the momentum of the associated particle, reinforcing its significance in describing wave-particle duality. A closely related quantity is the reduced Planck constant (ħ), equal to h divided by 2π. This reduced constant appears frequently in quantum physics equations, especially in formulations of wave functions and angular momentum.
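For readers who like to see the numbers, the two relations just described (photon energy equals h times frequency, and matter wavelength equals h divided by momentum) can be tried out in a few lines of Python. This is only a minimal sketch: the function names are my own invention, the 532 nm green light and the electron speed of one million metres per second are arbitrary illustrative choices, and the momentum is taken as the simple non-relativistic mass times velocity.

```python
import math

# Exact values fixed by the 2019 redefinition of the SI.
PLANCK_H = 6.62607015e-34         # Planck constant h, in joule-seconds
SPEED_OF_LIGHT = 299_792_458.0    # c, in metres per second
ELECTRON_VOLT = 1.602176634e-19   # one electronvolt, in joules

# Electron rest mass in kilograms (measured value, rounded).
ELECTRON_MASS = 9.1093837e-31


def reduced_planck() -> float:
    """Return the reduced Planck constant, h divided by 2*pi."""
    return PLANCK_H / (2.0 * math.pi)


def photon_energy_joules(wavelength_m: float) -> float:
    """Energy of a single photon: E = h * f, with f = c / wavelength."""
    frequency_hz = SPEED_OF_LIGHT / wavelength_m
    return PLANCK_H * frequency_hz


def de_broglie_wavelength_m(mass_kg: float, speed_m_per_s: float) -> float:
    """Matter wavelength: lambda = h / p, assuming non-relativistic p = m * v."""
    return PLANCK_H / (mass_kg * speed_m_per_s)


if __name__ == "__main__":
    green_light = 532e-9  # illustrative choice: green light, in metres
    energy = photon_energy_joules(green_light)
    print(f"photon energy: {energy:.3e} J ({energy / ELECTRON_VOLT:.2f} eV)")

    wavelength = de_broglie_wavelength_m(ELECTRON_MASS, 1.0e6)
    print(f"electron matter wavelength: {wavelength:.3e} m")
    print(f"reduced Planck constant: {reduced_planck():.4e} J s")
```

The photon of green light carries a little over two electronvolts, and the electron's matter wavelength comes out at a fraction of a nanometre, which is comparable to the size of an atom. That is one way of seeing why the wave nature of electrons matters inside atoms and not for pencils.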

Historically, Planck originally introduced this constant to explain black-body radiation, which classical physics failed to describe accurately. Later, Einstein expanded upon Planck's concept, associating the quantisation of energy directly with electromagnetic waves. This basic principle paved the way for quantum mechanics, influencing various aspects of physics, from atomic structure to statistical mechanics. The Planck constant is now a key element in modern physics and even plays a role in defining the kilogram in the International System of Units (SI).

"This constant, we now know, sets the scale of quantum phenomena." ("Quantum theory's unsung hero - ScienceDirect")

Albert Einstein saw what this equation could mean: light is made of particles, or "quanta of light", each having energy E = hν, where ν is the frequency of the light. This contradicted what was considered the empirically established view at the time: that light is a wave. A suggestion so contrary to the established view was not taken seriously by the academic community. Although Einstein became instantly famous for his work on relativity, his "quanta of light" were considered outlandish. "But his quanta predicted a physical effect that turned out to be real, and earned him his Nobel prize." ("Quantum theory's unsung hero - ScienceDirect")

Einstein's paper on the subject opens with the words: "It seems to me that [numerous] observations... are more readily understood if one assumes that the energy of light is discontinuously distributed in space." ("Carlo Rovelli on what we get wrong about the origins of quantum theory") Note the wonderful starting words, "It seems to me". Ordinary people have certainties, but not so Einstein, always open-minded.

The progression of quantum theory was significantly influenced by the pioneering work of Niels Bohr in Denmark. Bohr focused on atomic structure, particularly the emission of light at distinct frequencies, which could be precisely measured in laboratory settings. He recognized that these specific frequencies could be explained if electrons orbited the atomic nucleus in discrete, "quantised" trajectories. Similar to Einstein's concept of light quanta, these electron orbits could only possess particular, quantised energy levels. Electrons would then transition between these orbits, emitting discrete packets of light energy, phenomena now famously known as "quantum jumps."
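To make Bohr's idea a little more tangible, here is a small Python sketch for the simplest atom, hydrogen. It rests on assumptions that are not spelled out in the text above: the standard textbook result that the n-th Bohr orbit has energy of about -13.6 electronvolts divided by n squared, and the neglect of the small correction for the finite mass of the nucleus. The function names are hypothetical, chosen only for this illustration.

```python
PLANCK_H = 6.62607015e-34         # Planck constant h, in joule-seconds
SPEED_OF_LIGHT = 299_792_458.0    # c, in metres per second
ELECTRON_VOLT = 1.602176634e-19   # one electronvolt, in joules

# Binding energy of the hydrogen ground state in electronvolts (rounded;
# assumes an infinitely heavy nucleus, as in the simplest Bohr model).
RYDBERG_ENERGY_EV = 13.605693


def level_energy_ev(n: int) -> float:
    """Energy of the n-th quantised orbit in the Bohr model of hydrogen."""
    if n < 1:
        raise ValueError("orbit number n must be 1 or larger")
    return -RYDBERG_ENERGY_EV / n**2


def emitted_wavelength_nm(n_upper: int, n_lower: int) -> float:
    """Wavelength of the light emitted in a jump from n_upper down to n_lower."""
    if n_upper <= n_lower:
        raise ValueError("emission requires n_upper > n_lower")
    # The photon carries away the energy difference between the two orbits.
    delta_e_joules = (level_energy_ev(n_upper) - level_energy_ev(n_lower)) * ELECTRON_VOLT
    frequency_hz = delta_e_joules / PLANCK_H      # from E = h * f
    return SPEED_OF_LIGHT / frequency_hz * 1e9    # metres converted to nanometres


if __name__ == "__main__":
    for upper in (3, 4, 5):
        print(f"jump {upper} -> 2: {emitted_wavelength_nm(upper, 2):.1f} nm")
```

The jumps down to the second orbit land in the visible range, around 656 nm (red) and 486 nm (blue-green) for the first two, close to the lines that spectroscopists had long measured in hydrogen.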

Initially, many physicists regarded this notion as implausible, likening it to mysticism. However, Bohr's theoretical framework proved remarkably effective, enabling accurate predictions of the frequencies of emitted light. This marked a significant step toward unravelling the mysteries of atomic behaviour. Bohr soon gained widespread recognition and established an institute in Copenhagen, which became a hub for the brightest minds of the younger generation, all striving to deepen their understanding of atomic physics. Among these scholars was Werner Heisenberg. In the summer of 1925, inspired by Bohr's ideas and seeking respite from a severe bout of hay fever, the 23-year-old Heisenberg retreated to the isolated, windswept island of Helgoland in the North Sea.

During several days of intense and solitary calculations, interwoven with moments of conceptual confusion, Heisenberg devised a groundbreaking mathematical formulation that would fundamentally alter the trajectory of scientific thought. He redefined the representation of an electron's position, not as a single variable but as a matrix of numerical values, with rows and columns corresponding to the initial and final states of a quantum transition. This laid the foundation for what would become matrix mechanics, one of the cornerstones of modern quantum mechanics.

Upon returning to his home institution, the University of Göttingen in Germany, Werner Heisenberg presented the results of his recent calculations to his academic advisor, Max Born. Upon examining Heisenberg's intricate mathematical work, Born identified a vitally important conceptual advancement in the emerging framework of quantum physics: physical quantities could no longer be represented merely as classical variables. Instead, they required description through more sophisticated mathematical objects, specifically, non-commuting operators. In this context, "non-commuting" refers to the fact that the product of two such quantities depends on the order in which they are multiplied.

Born deduced from Heisenberg's formulation that the position (X) and momentum (P) of an electron must satisfy the fundamental commutation relation:

$$XP - PX = i\hbar = \frac{ih}{2\pi}$$

In this equation, h denotes Planck's constant, introduced a quarter of a century earlier, ħ is the reduced Planck constant h/2π, and i is the imaginary unit, defined as the square root of -1.

This expression lies at the very foundation of quantum mechanics, establishing the principle that the precise simultaneous determination of a particle's position and momentum is fundamentally impossible. The sequence of measurements, whether position precedes momentum or vice versa, yields different results, signifying the inherent uncertainty of quantum observables.
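The idea that "the order of multiplication matters" is easy to check with actual matrices. The sketch below is my own illustration, not Heisenberg's or Born's calculation: it assumes the standard textbook matrices for the position and momentum of a harmonic oscillator, in units chosen so that the reduced Planck constant equals 1, and it cuts the (in principle infinite) matrices down to a small size. The helper names are hypothetical.

```python
import math


def matmul(a, b):
    """Multiply two square matrices given as lists of rows."""
    size = len(a)
    return [
        [sum(a[i][k] * b[k][j] for k in range(size)) for j in range(size)]
        for i in range(size)
    ]


def position_and_momentum(size):
    """Build truncated oscillator matrices X and P (units with hbar = 1)."""
    # Lowering matrix: its only non-zero entries, sqrt(n), sit just above the diagonal.
    lower = [[0j] * size for _ in range(size)]
    for n in range(1, size):
        lower[n - 1][n] = complex(math.sqrt(n))
    # Raising matrix: the transpose (entries are real, so no conjugation is needed).
    upper = [[lower[j][i] for j in range(size)] for i in range(size)]
    root2 = math.sqrt(2.0)
    x = [[(lower[i][j] + upper[i][j]) / root2 for j in range(size)] for i in range(size)]
    p = [[1j * (upper[i][j] - lower[i][j]) / root2 for j in range(size)] for i in range(size)]
    return x, p


def commutator(a, b):
    """Return a*b - b*a, which is zero only if the order does not matter."""
    ab, ba = matmul(a, b), matmul(b, a)
    size = len(a)
    return [[ab[i][j] - ba[i][j] for j in range(size)] for i in range(size)]


if __name__ == "__main__":
    x, p = position_and_momentum(6)
    result = commutator(x, p)
    # Print the diagonal of XP - PX; off-diagonal entries vanish.
    print([result[i][i] for i in range(6)])
```

Every diagonal entry of XP - PX comes out as the imaginary unit i (that is, i times ħ in these units), except the very last one, which is an artefact of cutting the infinite matrices off at a finite size. With ordinary numbers in place of matrices, the difference would of course be zero.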

Recognizing the significance of this development, Born submitted Heisenberg's manuscript for publication under Heisenberg's name (see explanatory note below). Subsequently, with the assistance of Pascual Jordan, a young mathematician in his team, Born co-authored a seminal paper that laid the foundations of quantum mechanics, with both authors generously attributing the entire achievement to Heisenberg. Although much refinement and numerous practical applications of the theory would follow, by the end of 1925 the essential framework of quantum theory had already been articulated in the collaborative work of Born, Jordan, and Heisenberg.

In retrospect, it can be argued that, among the many contributors to this scientific breakthrough, Max Born merits primary recognition for the formal discovery of quantum mechanics. He not only coined the term quantum mechanics, but also discerned and formalized the foundational commutation relation that underpins the theory.

Despite his critical role, Born remains one of the under-appreciated architects of modern physics.

------------------------

Explanatory notes:

Ernst Pascual Jordan (1902 - 1980) was a German theoretical and mathematical physicist who made significant contributions to quantum mechanics and quantum field theory. He contributed much to the mathematical form of matrix mechanics, and developed canonical anticommutation relations for fermions. He introduced Jordan algebras in an effort to formalize quantum field theory; the algebras have since found numerous applications within mathematics. Unfortunately, Jordan joined the Nazi Party in 1933, but did not follow the Deutsche Physik movement, which at the time rejected quantum physics developed by Albert Einstein and other Jewish physicists. After the Second World War, he entered politics for the conservative party CDU and served as a member of parliament from 1957 to 1961.

Giving full credit to Heisenberg: an example of generous behaviour, so uncommon in the 21st-century academic milieu of "publish or perish".

Wolfgang Ernst Pauli (1900 - 1958) was an Austrian-American-Swiss theoretical physicist known for his work on spin theory, and for the discovery of the "Pauli exclusion principle", which is important for the structure of matter and the whole of chemistry. He was awarded the Nobel Prize in Physics in 1945.

----------------------------------

A few months later, Wolfgang Pauli demonstrated that the new theory could also account for the intensities of light, in addition to the frequencies, deriving them from first principles.

In a letter to his long-time friend Michele Besso, Albert Einstein remarked on the significance of these developments, referring to the Heisenberg-Born-Jordan formulation of quantum states as: "The most interesting theorisation of recent times is that of Heisenberg-Born-Jordan on quantum states: a calculation of real witchery."

Niels Bohr, often regarded as the elder statesman of quantum theory, would later reflect on that pivotal period with characteristic modesty and admiration: "At the time, we had only a vague hope of arriving at a reformulation of the theory in which every inappropriate use of classical ideas would gradually be eliminated. Daunted by the difficulty of such a programme, we all felt great admiration for Heisenberg when, at just twenty-three, he managed it in one swoop." ("Quantum theory's unsung hero - ScienceDirect")

While this achievement is rightly attributed to Werner Heisenberg, it is important to acknowledge that he did not work in isolation; he was supported and influenced by his contemporaries and mentors. Nonetheless, this was far from the final chapter in the unfolding narrative of quantum mechanics.

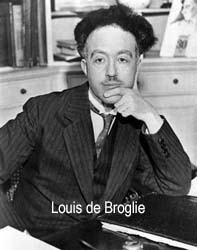

However, new complexities soon emerged. Erwin Schrödinger arrived at results equivalent to those of Wolfgang Pauli, yet he employed a fundamentally different conceptual route. Schrödinger's insights were not built in a traditional academic setting; according to popular accounts, he developed his ideas while on a retreat in the Swiss Alps.

Schrödinger's formulation drew upon the earlier doctoral work of Louis de Broglie, a young physicist whose thesis, highlighted to Schrödinger by Albert Einstein, proposed the then-radical notion that electrons, traditionally regarded as discrete particles, might also exhibit wave-like properties, akin to Einstein's light quanta. Intrigued, Schrödinger sought to determine the mathematical equation that would govern such wave-like behaviour.

The notion that an electron is simply a wave unsettled the Göttingen group and their complex theories involving non-commuting quantities. It seemed Heisenberg, Born, Jordan, and Dirac had taken a convoluted path, while Schrödinger offered a clearer, more intuitive approach. Waves are easy to visualize, and Schrödinger appeared to have won.

THE ILLUSION OF CLARITY

Schrödinger's apparent triumph was short-lived. Heisenberg soon realized that the seeming clarity of wave mechanics was illusory. Unlike waves, which are inherently spread out, electrons manifest at specific, localized points upon detection.

This fundamental inconsistency reignited debate, which quickly turned contentious. Heisenberg was particularly critical: "The more I reflect on the physical implications of Schrödinger's theory, the more repugnant I find it. When he admits that the visualisability of his theory may not be entirely accurate, it amounts to saying it is nonsensical." Schrödinger replied with characteristic irony: "I cannot imagine an electron leaping about, here and there, like a flea."

In retrospect, Heisenberg's critique proved justified. Wave mechanics offered no greater clarity than the abstract, non-commutative formalism developed in Göttingen.

Years later, Schrödinger, who evolved into one of the most insightful commentators on the peculiar nature of quantum phenomena, acknowledged this limitation: "There was a time," he wrote, "when the pioneers of wave mechanics [myself included] believed we had eliminated the discontinuities of quantum theory. But the discontinuities removed from the equations return as soon as the theory is confronted with observation."

Max Born was belatedly awarded the Nobel Prize in 1954, and even then solely for his "statistical interpretation of the wave function." ("Max Born, Physics (1882 to 1970) - Universität Göttingen") This recognition came surprisingly late, considering his foundational contributions to quantum mechanics in 1925.

Born had already articulated the core structure of the theory, formulated in the established commutation relation $XP - PX = i\hbar$, and had developed the statistical interpretation even before Schrödinger introduced his wave mechanics. One possible reason for this delay may lie in his collaboration with Pascual Jordan, a key co-author of the foundational quantum mechanics papers. Jordan's later affiliation with the Nazi regime could have complicated post-war recognition, particularly in the context of the Nobel Prize.

The nature of quantum phenomena and their implications for our understanding of reality remain subjects of ongoing debate. Multiple interpretations coexist. My Old Man views Schrödinger's wave function not as a direct representation of physical reality, but as a mathematical expression of the information that one system has about another. This perspective aligns with what is known as the "relational" interpretation, which emphasizes the relational nature of quantum states, describing only how systems affect each other, rather than their intrinsic properties in isolation. Other interpretations, such as QBism, see quantum states as encoding the observer's knowledge of a system.

From this standpoint, my Old Man believes Schrödinger's wave mechanics, rather than illuminating the essence of quantum theory, may have obscured the conceptual clarity achieved by the Göttingen school and by Dirac. It encouraged the mistaken view that quantum theory was about enigmatic waves or mystical "quantum states," rather than a clear framework for predicting probabilistic interactions between systems.

To many, including my Old Man, quantum phenomena reveal a world that is fundamentally probabilistic and discrete at scales determined by the Planck constant. Reality, in this view, consists of the interactions / manifestations of physical systems with one another. This notion is captured in the words of Niels Bohr: "In quantum physics, the interaction with the measuring apparatus is an inseparable part of the phenomenon." Today, we might simply replace "measuring apparatus" with "any interacting physical system." Reality, then, is the network of influences that systems exert upon each other, a perspective that, many believe, faithfully reflects the original spirit of quantum mechanics as conceived by Max Born, the scientist who gave the theory its name.

------------------------------------------------------------------------------------------

APPLICATIONS ?

Despite considerable optimism surrounding quantum computing, bolstered by notable hardware advancements and promising future applications, a 2023 Nature spotlight article offered a sobering assessment, characterizing current quantum computers as "for now, [good for] absolutely nothing." The article emphasized that, at present, quantum machines have not demonstrated any clear advantage over classical computers in practical applications. Nevertheless, it acknowledged that quantum computing is likely to yield significant utility in the long term as the technology matures.

--------------------------------------------------------------------------------------------

Unfortunately, quantum physics is often denigrated or maligned. Its description of atomic and subatomic behaviour is frequently labelled as "weird," leading to the proliferation of speculative interpretations, such as the idea that we inhabit a multiverse or that observable reality is merely an illusion. These more sensational notions can obscure a critical truth: quantum physics has had a tangible and profound impact on modern life. For example, every glance at a smartphone relies on technologies rooted in quantum mechanics.

Yet the practical applications of quantum theory extend far beyond current consumer electronics. As our ability to manipulate quantum phenomena improves, a new generation of technologies, engineered to exploit these effects more directly, is poised to reshape both science and society. Among these innovations, quantum teleportation and quantum sensing attract significant attention for their novelty. However, it is quantum computing, perhaps the most familiar of the emerging quantum technologies, which holds the greatest transformative promise.

According to its advocates, quantum computing has the potential to revolutionize numerous fields: it could accelerate pharmaceutical development, enable the discovery of advanced materials, and contribute to strategies for addressing climate change. Nevertheless, despite impressive theoretical and experimental progress, the field remains in flux. Significant engineering challenges persist.

Moreover, amid the rush to overcome these obstacles, a crucial point is often overlooked: the very foundation of quantum computing, the exploitation of quantum principles, makes it inherently difficult to predict what such machines will ultimately be useful for.

Despite the ambitious rhetoric surrounding the field, researchers continue to grapple with a fundamental question: "if we were to develop a fully functional quantum computer tomorrow, what practical applications would it serve?"

The pervasive influence of quantum physics in modern technology is frequently underestimated, largely due to the minuscule scales at which quantum effects operate. Quantum phenomena, such as particles exhibiting wave-like behaviour, are not observed at macroscopic scales. Although everyday objects, like pencils or a piece of fruit, are composed of atoms governed by quantum principles, they do not themselves exhibit quantum behaviour in any observable sense. Nevertheless, at the microscopic level, within the components of modern devices, the quantum nature of particles such as electrons plays a critical role.

Having said that, consider the transistor, the foundational element of contemporary electronics. These nanometre-scale semiconductors regulate the flow of electrons in microprocessors. Their functionality depends on precise manipulation of silicon, structured in layers and selectively infused with atoms of other elements. Crucially, this process would be unworkable without an understanding that electrons sometimes behave like waves, a quintessentially quantum property. It is no overstatement to claim that quantum physics, despite its reputation for abstraction and complexity, has profoundly reshaped the modern world.

Without quantum theory, modern technologies such as fibre optics, the internet, GPS and smartphones would not exist. However, physicists have long anticipated a further technological revolution, one in which devices do not merely exploit quantum effects incidentally, but instead rely on them as their fundamental operating principle.

Illustrative of this potential is quantum teleportation, which harnesses the phenomenon of entanglement, where the states of two particles remain correlated irrespective of the distance between them. Researchers have already demonstrated the successful teleportation of information over distances exceeding 100 kilometres through optical fibre and across more than 12,000 kilometres via satellite. Such capabilities could form the foundation of a faster, more secure quantum internet.

Another promising domain is quantum sensing, which holds the potential to enable measurements with unprecedented sensitivity. This advancement could significantly enhance fields such as navigation, geological exploration, and medical diagnostics. Nonetheless, it remains uncertain whether these emerging quantum technologies will expand beyond niche or specialised applications.

Quantum computing, by contrast, may have far broader implications, similar to the transformative impact of classical computing in previous decades. Its promise lies in a fundamental distinction between how quantum and classical computers process information. Traditional processors encode data using bits, binary digits represented as 1s and 0s, by controlling the flow of electric current.

Quantum computers, however, are built upon entirely different principles. In a quantum processor, information is encoded directly into the quantum properties of particles or atoms themselves. These elements are not merely passive components facilitating computation; rather, they serve as the principal agents of the process.

This fundamental distinction enables quantum computers to process significantly more information (simultaneously) than classical computers. Quantum bits, or qubits, are not limited to binary states of 0 or 1. While a qubit does not encode both values at once in a classical sense, it can exist in a "superposition", a quantum state in which it is, in effect, both and neither until a measurement is made.

Though this concept may seem counterintuitive, and its full implications for our understanding of reality remain a subject of philosophical and scientific debate, the practical advantages are profound. For example, a quantum system with just 10 qubits can exist in a superposition of all integers from 0 to 1023 at once, and describing that state on a conventional computer requires keeping track of 1,024 separate amplitudes.
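To make the counting concrete, here is a minimal sketch in Python of how a conventional computer might store such a state. It assumes the simplest case, an equal superposition with real amplitudes, and the function name `uniform_superposition` is purely illustrative.

```python
import math

def uniform_superposition(n_qubits):
    """Amplitudes of an equal superposition over every n-qubit basis state.

    Index i of the returned list stands for the integer i, i.e. the
    bit pattern the qubits would show if measured in that basis state.
    """
    dim = 2 ** n_qubits              # number of basis states
    amplitude = 1 / math.sqrt(dim)   # equal weight on each of them
    return [amplitude] * dim

state = uniform_superposition(10)
num_amplitudes = len(state)                      # one entry per integer 0..1023
total_probability = sum(a * a for a in state)    # squared amplitudes sum to 1

print(num_amplitudes, round(total_probability, 6))
```

Each additional qubit doubles the length of this list, which is one way of seeing why simulating even modest quantum systems quickly becomes costly for classical machines.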

THE PROMISE OF QUANTUM COMPUTING

The potential of quantum computing lies in its ability to perform certain calculations that would overwhelm conventional computers. In scenarios where classical machines exhaust their available computational resources, quantum systems may continue operating effectively. This vision has captivated researchers since the 1980s, prompting decades of dedicated effort to construct functional prototypes.

QUANTUM SUPREMACY

These efforts are now beginning to yield results. Today's most advanced quantum processors, some equipped with as many as 1,000 qubits, compared to just 50 a few years ago, are capable of solving select "proof-of-principle" problems that lie beyond the reach of even the most powerful classical supercomputers. This milestone is known as quantum supremacy.

"There have been truly remarkable advances in laboratory capabilities over the past five years," remarks my Old Man. "The bar continues to rise." Nevertheless, many critical conditions must still be met before practical applications can be fully realized.

Despite these hurdles, the pace of progress has been striking. One of the most significant technical challenges is the inherent fragility of quantum states. Qubits are extremely sensitive to their environment, and even minute disturbances, collectively referred to as "noise", can cause them to lose their quantum properties ("What Are The Remaining Challenges of Quantum Computing?", https://thequantuminsider.com). As a result, quantum computations often accumulate small errors that compromise the reliability of their outcomes. Consequently, the race to develop a viable quantum computer is, in many ways, a race to achieve fault tolerance. Expanding the number of stable, error-corrected qubits will also be crucial, as a quantum computer's computational capacity scales with the number of reliable qubits it contains.
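To give a feel for why redundancy can suppress errors, here is a minimal sketch in Python. It is a purely classical analogy (several copies of one bit, decoded by majority vote), not the scheme used in any real quantum processor. The function name `majority_vote_error` and the 1% error rate are assumptions chosen for illustration.

```python
from math import comb

def majority_vote_error(p, n):
    """Probability that more than half of n independent copies are flipped,
    when each copy flips with probability p (n is assumed to be odd)."""
    return sum(comb(n, k) * p**k * (1 - p)**(n - k)
               for k in range(n // 2 + 1, n + 1))

p = 0.01  # hypothetical error rate of a single copy
errors = {n: majority_vote_error(p, n) for n in (1, 3, 5, 7)}

for n, e in errors.items():
    print(f"{n} copies -> logical error rate {e:.2e}")
```

As long as the error rate of each copy is below 50%, more copies mean fewer logical errors. Real quantum error correction is far subtler, since quantum states cannot simply be copied, but the underlying intuition is similar.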

Encouragingly, progress has been made on multiple fronts. Techniques for constructing qubits, ranging from superconducting circuits to arrays of ultra-cold atoms manipulated with lasers, have significantly improved the stability of quantum systems.

Nonetheless, considerable effort and innovation will still be required. Realizing a large-scale quantum computer comprising millions of qubits will demand vast infrastructure and a working environment kept at extremely low temperatures.

A quantum computer with a million superconducting qubits would need to be housed within a large-scale refrigeration system, as such qubits require extremely low temperatures to function reliably. Moreover, its control infrastructure would demand thousands of individual wiring connections. Similarly, scaling a quantum computer built from ultracold atoms could involve the use of thousands of lasers. One proposed solution is to interconnect multiple smaller quantum processors to function as a unified system.

However, what may ultimately hinder the realization of truly transformative quantum applications, and what is frequently overlooked in discussions surrounding fault-tolerant quantum computers, is the current uncertainty regarding which types of problems these devices will be best equipped to solve. This uncertainty stems from the nuanced and counterintuitive nature of quantum mechanics, which complicates efforts to fully exploit the potential computational power of quantum systems. While the concept of a qubit existing in a superposition, neither strictly 0 nor 1, suggests the appealing notion of simultaneous parallel computation, the reality is considerably more subtle and operationally complex.

What fundamentally distinguishes a qubit in superposition from a classical bit is that, while a classical bit encodes either 0 or 1 with complete certainty, a qubit may only yield a probabilistic outcome upon measurement, for example, a 30% chance of returning one. Computation in a quantum system involves sequences of transformations to the qubits' states. When those states are in superposition and exhibit correlations (i.e., entanglement), they can enhance computational performance. However, reading out the result requires measuring the qubits, a process that yields a single probabilistic outcome rather than multiple simultaneous results.
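The probabilistic readout described above can be imitated on an ordinary computer. The following Python sketch assumes a single qubit prepared so that it returns 1 with 30% probability; the helper name `measure`, the fixed random seed, and the number of repetitions are illustrative choices, not part of any real quantum toolkit.

```python
import math
import random

def measure(amp0, amp1, rng):
    """Simulate one measurement of a single qubit.

    amp0 and amp1 are the amplitudes for outcomes 0 and 1; the chance
    of each outcome is its squared magnitude (normalised to sum to 1).
    """
    p0 = abs(amp0) ** 2
    p1 = abs(amp1) ** 2
    return 1 if rng.random() < p1 / (p0 + p1) else 0

rng = random.Random(0)                       # fixed seed so runs repeat
amp0, amp1 = math.sqrt(0.7), math.sqrt(0.3)  # 30% chance of returning 1

shots = [measure(amp0, amp1, rng) for _ in range(10_000)]
frequency_of_one = sum(shots) / len(shots)

print(f"fraction of 1s over {len(shots)} runs: {frequency_of_one:.3f}")
```

Every individual run yields a plain 0 or 1. The 30% only becomes visible when the same preparation and measurement are repeated many times.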

This inherently probabilistic readout means that quantum computation does not guarantee greater speed or efficiency for all problems. For instance, determining whether a binary string contains an odd or even number of 1s would take comparable time on both a classical and a quantum computer. Thus, identifying both the appropriate problem and the optimal quantum algorithm is critical to unlocking the full advantages of quantum computation, an endeavour that remains profoundly challenging.

Fields such as computational complexity theory may offer insight into which problems quantum computers are most likely to solve significantly faster than classical machines. Yet, as my Old Man remarks, the discovery of ground-breaking quantum algorithms remains a rare and unpredictable occurrence: "There is no simple, unified method for constructing quantum algorithms. It is more of an art than a science."

At present, the field is caught in a kind of catch-22. Without larger, less error-prone quantum devices, it remains impossible to experimentally verify the performance of advanced quantum algorithms. Scientists are developing algorithms and proving their theoretical feasibility, but we are not yet able to test them in practice.

Even the most renowned quantum algorithm, the one Peter Shor discovered in 1994, which could factor large numbers and thereby break widely used encryption schemes, is currently beyond the reach of existing quantum hardware, which lacks the requisite scale and error correction. It seems that we still do not have the ability to run such algorithms at a meaningful scale on real hardware.
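The arithmetic at the heart of Shor's approach can be tried out on a toy number. The Python sketch below is a classical stand-in only: it finds the period by brute force, which is precisely the step a quantum computer is expected to accelerate. The function names and the choice of N = 15 with base a = 7 are assumptions made for illustration.

```python
from math import gcd

def find_order(a, n):
    """Smallest r > 0 with a**r % n == 1, found by brute force.

    Requires gcd(a, n) == 1. This is the period-finding step that
    Shor's algorithm hands over to the quantum computer.
    """
    r, value = 1, a % n
    while value != 1:
        value = (value * a) % n
        r += 1
    return r

def factor_from_order(a, n):
    """Try to split n using the order of a modulo n; None if a is unlucky."""
    shared = gcd(a, n)
    if shared != 1:
        return shared, n // shared   # a already shares a factor with n
    r = find_order(a, n)
    if r % 2 == 1:
        return None                  # odd order: pick another a
    half = pow(a, r // 2, n)
    if half == n - 1:
        return None                  # trivial square root: pick another a
    return gcd(half - 1, n), gcd(half + 1, n)

order = find_order(7, 15)
factors = factor_from_order(7, 15)
print(order, factors)
```

For a number this small the brute-force loop is instant. For the very large numbers used in encryption it becomes hopelessly slow, which is where a sufficiently large, error-corrected quantum computer is expected to make the difference.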

What applications and societal impacts can be expected as quantum computers continue to advance? There is reason for cautious optimism. In recent years, several research groups have achieved meaningful progress toward the development of error-corrected quantum computers. Notably, scientists at Google Quantum AI demonstrated that scaling up the number of qubits in their Willow quantum processor can actually lead to a reduction in overall error rates, a critical step toward building large-scale, fault-tolerant quantum machines. If this trend continues, it is plausible that within the next few years, quantum computers will be capable of addressing complex problems in chemistry and materials science with practical, real-world implications, particularly when integrated into a broader hybrid computing ecosystem.

Quantum computers may prove invaluable in determining the properties of molecules that could lead to more efficient catalysts for fuel cells and pharmaceutical applications. In the field of materials science, they hold promise for modelling and designing improved superconductors capable of transmitting electricity with minimal loss, potentially eliminating the need for extreme cooling.

These machines also offer significant potential in pharmaceutical research. Already, quantum computers are being employed to perform calculations that help identify optimal binding mechanisms between drugs and biological molecules, as well as to predict the toxicity of prospective compounds. Looking further ahead, some researchers envision deploying artificial intelligence algorithms on quantum computing hardware. AI does not align as naturally with quantum systems as chemistry does, however, and the viability of this approach remains an open question, with no clear consensus regarding its practicality.

Although many of these advances may remain distant and unknown, progress is already evident. John Preskill of the California Institute of Technology notes that quantum computers have contributed to numerous discoveries that enhance our understanding of fundamental physical phenomena, what he refers to as "discoverinos." These include findings related to how atomic chains develop magnetic properties, simulations of exotic "time crystals" that appear to remain in "perpetual motion", and studies of systems that seem capable of resisting entropy, the universal tendency toward disorder.

---------------------------------

Explanatory notes:

Shor's algorithm is a quantum algorithm for finding the prime factors of an integer. It was developed in 1994 by the American mathematician Peter Shor. It is one of the few known quantum algorithms with compelling potential applications and strong evidence of superpolynomial speedup compared to best known classical (non-quantum) algorithms. On the other hand, factoring numbers of practical significance requires far more qubits than available in the near future. Another concern is that noise in quantum circuits may undermine results, requiring additional qubits for quantum error correction.

John Phillip Preskill is an American theoretical physicist and the Richard P. Feynman Professor of Theoretical Physics at the California Institute of Technology, where he is also the director of the Institute for Quantum Information and Matter.

--------------------------------

The value of quantum computing lies not solely in its immediate applications. A parallel can be drawn to the Laser Interferometer Gravitational-Wave Observatory (LIGO), which relies on quantum techniques for manipulating light, techniques similar to those used in some quantum computer designs. These innovations significantly increased the frequency of gravitational wave detections. It is argued that the pursuit of a million-qubit quantum computer is likely to yield similarly transformative secondary benefits.

Ultimately, the lack of clarity surrounding which quantum algorithms will prove most effective makes it difficult to forecast the precise societal impact of quantum computing. Yet this uncertainty is not indicative of a lack of potential. According to Brian DeMarco, the situation is akin to asking a computer engineer in the 1970s to predict the development of the smartphone. "What excites me most," he says, "are the breakthroughs we cannot yet imagine."

OBSERVATIONS ON THE MEANING OF QUANTUMNESS

The challenge presented by quantum mechanics and the reason it remains elusive to most of us, even to many physicists, is not that it offers an unfamiliar depiction of reality. It is not inherently difficult to accept that the realm of fundamental particles, a domain entirely beyond our direct experience, might differ radically from the macroscopic world we perceive.

Rather, the difficulty lies in the theory's failure to articulate a clear transition between these two domains.

Quantum mechanics does not provide a coherent account of how the classical world emerges from quantum processes. Consequently, even a century after its foundational principles were established, we still lack a definitive understanding of what this scientific tour de force implies about the nature of reality.

---------------------------------

Explanatory notes:

The Laser Interferometer Gravitational-Wave Observatory (LIGO) is a large-scale physics experiment and observatory designed to detect cosmic gravitational waves and to develop gravitational-wave observations as an astronomical tool.

Brian DeMarco is a physicist and professor of physics at the University of Illinois at Urbana-Champaign. DeMarco is currently conducting experiments in quantum simulation. The DeMarco group carries out fundamental research on one of the frontiers of 21st century science: interacting many-particle quantum mechanics.

Nathaniel David Mermin is a solid-state physicist at Cornell University best known for the eponymous Hohenberg-Mermin-Wagner theorem.

--------------------------------

There is no shortage of interpretive frameworks. However, preference among them is often guided more by philosophical inclination than empirical validation, as most interpretations resist experimental scrutiny. As physicist N. David Mermin once remarked, "New interpretations appear every year. None ever disappear."

IS ANYTHING REAL?

In recent years, however, the landscape has begun to shift. A new formulation of quantum theory has emerged that makes explicit observational predictions, raising hopes for empirical progress. Simultaneously, another approach has gained acceptance for its apparent ability to resolve multiple long-standing quantum puzzles in a unified manner, even though it challenges the very notion of objective reality.

More encouraging still, physicists have started to explore innovative experimental strategies to test these foundational assumptions. By transforming once purely theoretical thought experiments into empirical investigations, researchers may finally begin to constrain the wide field of interpretations and move closer to uncovering what quantum mechanics is truly revealing.

Classical mechanics fails to adequately describe the behaviour of subatomic particles such as electrons and photons. Experimental evidence reveals that these entities exhibit phenomena that defy classical intuition, for example, behaving both as particles and as waves, and existing in what is known as a "superposition" of multiple possible states simultaneously. It is only upon measurement that they appear to assume definite, classical properties.

This inherent ambiguity is described mathematically by the Schrödinger equation, which governs the wave function, a tool that encodes all potential outcomes of a quantum system. The wave function enables the calculation of the probabilities that a particle will be observed in a particular state or location when measured. At the moment of measurement, the wave function is said to "collapse," but crucially, the theory cannot predict the exact result of any individual measurement. Prior to observation, only probabilistic information is available.

WHAT REMAINS UNKNOWN?

A number of foundational questions remain unresolved. What exactly occurs before a measurement is made? Quantum theory offers no definitive answer. It also fails to clearly define what constitutes a "measurement," nor does it settle whether the wave function, or quantum state, corresponds to a physically real entity. These gaps are remarkable for a theory as successful and widely accepted as quantum mechanics. At their core, these uncertainties converge on a fundamental issue: how does the stable, deterministic classical world, composed of atoms and particles, emerge from the probabilistic and abstract framework of quantum theory? This is known as the measurement problem and is widely regarded as the central unresolved question in quantum mechanics.

The most well-known response to this problem is the Copenhagen Interpretation, named after the city where it was developed. This view asserts that nothing can be said about a particle's properties prior to measurement. The formalism is effective, and thus, as physicist David Mermin famously summarized it, the prevailing attitude became: "Shut up and calculate." However, the Copenhagen interpretation has been contentious since its inception. Albert Einstein notably criticized its probabilistic nature, asserting that "God does not play dice with the universe."

Many physicists continue to regard the Copenhagen interpretation as an unsatisfactory response. "It's not a serious answer to the question of what is there, in reality," says Roderich Tumulka, a theoretical physicist at the University of Tübingen in Germany ("The meaning of quantumness - ScienceDirect"). "We want statements about the true nature of reality," he said. Furthermore, the interpretation seems to permit the unsettling implication that human observers, conscious beings, are the agents responsible for collapsing the wave function.

My Old Man is among those who advocate for interpretations in which the wave function represents an element of physical reality, existing independently of observation. The most prominent of these alternatives is the many-worlds interpretation, which offers a radically different view of quantum phenomena.

However, there also exists a class of theories known as objective collapse models, which posit that quantum mechanics, as it currently stands, is incomplete. These models suggest that an additional mechanism must be incorporated into the Schrödinger equation to account for the phenomenon of wave function collapse.

A defining distinction between objective collapse theories and the standard interpretation lies in the treatment of this collapse. In contrast to the conventional view, where the wave function appears to collapse instantaneously and inexplicably upon measurement, collapse models propose that this process is a genuine physical phenomenon, embedded within the dynamics of the system itself.

In recent years, collapse models have attracted increasing attention, partly because they offer a coherent explanation for the emergence of classical reality without invoking the role of human observers. According to these models, macroscopic objects such as picture frames or paint brushes do not exhibit quantum superposition because the collapse mechanism operates more effectively as the number of interacting particles increases. In essence, the greater the complexity of the system, the more readily it undergoes collapse.

The precise trigger of this ongoing collapse process remains uncertain. Some models leave the mechanism unspecified, while others speculate that gravity may be responsible. As my Old Man remarked, it is possible that no definitive explanation exists, that collapse may simply be an intrinsic feature of nature. He is drawn to collapse models for precisely this reason: they venture beyond the boundaries of established quantum theory, into territory that is still largely unexplored and unknown.

What truly distinguishes collapse models, however, is their falsifiability. Unlike many interpretations of quantum mechanics, collapse models yield concrete, testable predictions that diverge from those of standard theory. Specifically, the continuous process of spontaneous collapse is expected to induce slight, random motion, or "jitter", in quantum particles, leading them to emit small amounts of excess energy. Although this signal is predicted to be extremely faint, it should, in principle, be detectable through precise experimental observation.

Over the past decade, Angelo Bassi has collaborated with researchers worldwide on an ambitious experimental program aimed at detecting signals indicative of objective collapse. Much of this work involves repurposing detectors originally designed to search for dark matter or elusive particles such as neutrinos, particularly using highly sensitive instruments located deep underground beneath the Gran Sasso massif in Italy. Preliminary results have begun to emerge. In 2020, for example, a team that included Bassi was able to rule out the simplest version of a model in which gravity is responsible for the collapse of the wave function.

Similar experiments continue, and with each new analysis, researchers gain further constraints on the viability of various collapse models. While the mere possibility of experimentally testing objective collapse represents meaningful progress, obtaining definitive answers remains a slow and incremental process. "So far, we have seen no signal, but this is just the beginning," says Bassi.

-------------------------------------------------------------------------------------------------------------------------------

"The basic tenet of quantum mechanics is that a particle can be in two states at once, like here + there; yet we only see it here OR there. One of the leading explanations of how this can happen seems to be wrong."

Professor Angelo Bassi, University of Trieste, Italy

---------------------------------------------------------------------------------------------------------------------------------

Should a signal consistent with objective collapse be detected, it would represent a significant discovery. However, whether such a result would immediately clarify the foundational implications for quantum theory is uncertain. It would still be necessary to determine the specific environmental factor responsible for inducing collapse in the observed instance.

"It would, in some sense, resolve the measurement problem, if one suspects that quantum theory is incomplete," remarked my Old Man. "Nevertheless," he continued, "it would not directly illuminate what quantum mechanics tells us about the nature of reality. One would still need to interpret the result: to identify what kind of 'noise' in the environment is responsible for collapsing the wave function."

More fundamentally, such a discovery would not explain why the observable properties of quantum systems emerge probabilistically during measurement. The necessity of invoking probability in the first place remains a profound mystery. There is no evident reason why the behaviour of subatomic particles should not be governed by deterministic laws. The fact that they are not calls out for a deeper explanation.

Conventionally, probabilities are interpreted through a frequentist lens: by tallying the outcomes of numerous trials, such as coin tosses, one infers that the likelihood of obtaining heads or tails is approximately 50/50. In a similar vein, repeated measurements of a quantum particle reveal the relative probabilities of it being found in one state or another upon observation. The Bayesian approach, by contrast, conceptualizes probability as a subjective degree of belief, which is continuously revised in light of new information.
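The contrast between the two readings of probability can be seen in a small Python sketch. The ten coin tosses are made-up data, and the Bayesian part assumes one common textbook choice, a uniform Beta(1, 1) starting belief; other starting beliefs would give different numbers. The function names are illustrative.

```python
def frequentist_estimate(tosses):
    """Fraction of heads (1s) observed across all tosses."""
    return sum(tosses) / len(tosses)

def bayesian_update(alpha, beta, toss):
    """Revise a Beta(alpha, beta) belief about the chance of heads
    after seeing one toss (1 = heads, 0 = tails)."""
    return alpha + toss, beta + (1 - toss)

tosses = [1, 0, 1, 1, 0, 1, 0, 0, 1, 1]  # hypothetical data: 6 heads, 4 tails

frequency = frequentist_estimate(tosses)

alpha, beta = 1, 1                        # assumed prior: no initial preference
for toss in tosses:
    alpha, beta = bayesian_update(alpha, beta, toss)
posterior_mean = alpha / (alpha + beta)   # current degree of belief in heads

print(frequency, alpha, beta, round(posterior_mean, 4))
```

The frequentist figure is simply a tally taken after the fact. The Bayesian figure is a belief that shifts a little with every toss and never quite forgets where it started.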

Building on this foundation, the central thesis of Quantum Bayesianism (or QBism) is that quantum mechanics itself should be understood in subjective terms. The theory is seen not as a description of objective reality, but as a normative guide for individual agents, offering prescriptions for how they ought to form and update their expectations about the outcomes of future measurements based on prior experience.

"It's a theory for agents to navigate the world," explains Ruediger Schack of Royal Holloway, University of London, who, alongside Chris Fuchs of the University of Massachusetts Boston, helped develop the QBist framework.

The appeal of QBism lies in its capacity to address several longstanding interpretational puzzles in quantum mechanics. It tackles the measurement problem by elevating the role of subjective experience, asserting that the so-called "collapse" of the wave function is nothing more than the agent revising their beliefs upon receiving new data.

---------------------------------

Explanatory notes:

Professor Angelo Bassi, University of Trieste, Italy

The Gran Sasso massif, literally "Great Rock of Italy", is a dramatic mountain group in the central Apennines, located in the Abruzzo region. It's home to Corno Grande, the highest peak in the Apennines at 2,912 meters.

Bayesian probability is an interpretation of the concept of probability, in which, instead of frequency or propensity of some phenomenon, probability is interpreted as reasonable expectation representing a state of knowledge or as quantification of a personal belief. It can be seen as an extension of propositional logic that enables reasoning with hypotheses; that is, with propositions whose truth or falsity is unknown.

--------------------------------

QBism addresses the question of how classical reality arises from the quantum domain by proposing that it emerges through our interactions with the world, specifically through the continual process of updating our personal beliefs based on those interactions. This perspective even offers a straightforward resolution to a long-standing puzzle in quantum theory: the Wigner's Friend Paradox, a thought experiment published in 1961 by physicist Eugene Wigner. The paradox illustrates a scenario in which two observers, Wigner and a friend whom he observes conducting measurements on a quantum system, can arrive at mutually incompatible accounts of reality.

From a QBist standpoint, no paradox arises because measurement outcomes are inherently personal, tied to the individual who experiences them. Consequently, QBism rejects the notion that an entirely objective, observer-independent account of the universe is attainable. But that, according to Ruediger Schack, is precisely its central message, and perhaps quantum mechanics' most profound insight: that reality transcends what can be captured by any third-person perspective. "It's a radically different way of looking at the world," he affirms.

However, not all physicists are persuaded. Angelo Bassi, for example, argues that abandoning the notion of objective reality is too steep a philosophical cost. "The purpose of physics is to describe nature in an objective manner," he asserts.

A further criticism of QBism is that it does not appear to yield any testable predictions that distinguish it from standard quantum mechanics, nor does it offer a clear path toward empirical verification. We can concede that convincing people might come down to exposing the shortcomings of the alternatives.

This, arguably, brings us full circle. If the most promising empirical resolution to the measurement problem still leaves key questions unanswered even in the event of its confirmation, while alternative interpretations capable of addressing those questions resist empirical scrutiny, it remains unclear how best to proceed.

Nevertheless, there may be grounds for cautious optimism. In recent years, some physicists have begun to show that foundational assumptions about the meaning of quantum theory, long considered the domain of philosophy rather than physics, may themselves be amenable to experimental investigation.

This new line of inquiry has been termed experimental metaphysics. Its aim is to clarify the underlying metaphysical assumptions embedded in various interpretations of quantum mechanics and to explore their empirical consequences.

Among these foundational assumptions are: the absoluteness of observed events, meaning that measurement outcomes are the same for all observers; freedom of choice, the principle that the choice of which measurement to perform is not predetermined by hidden variables; and locality, the idea that a free choice made in one location cannot influence the outcome of an experiment performed at a distant location or in the past.

"Individually, these assumptions may be beyond the reach of direct testing," notes physicist Eric Cavalcanti , "but when considered in combination, they can become experimentally accessible." In this way, it may be possible to falsify entire categories of quantum interpretations. Cavalcanti was part of the research team responsible for the most compelling application of this approach to date. In 2020, he and his colleagues conducted an extended version of the Wigner's friend thought experiment using photons and incorporating the phenomenon of quantum entanglement, which correlates particles over large distances. Their findings suggest that, if standard quantum mechanics holds true-for instance, if no evidence emerges for objective collapse, then one of the core assumptions must be relinquished: locality, freedom of choice, or the absoluteness of observed events

---------------------------------

Explanatory notes:

Wigner's friend is a thought experiment in theoretical quantum physics, first published by the Hungarian-American physicist Eugene Wigner in 1961, and further developed by David Deutsch in 1985. The scenario involves an indirect observation of a quantum measurement: An observer observes another observer who performs a quantum measurement on a physical system. The two observers then formulate a statement about the physical system's state after the measurement according to the laws of quantum theory.

Eric Cavalcanti, Associate Professor (ARC Future Fellow), Griffith University

--------------------------------

According to Cavalcanti, this experiment imposed the most stringent constraints on our understanding of physical reality to date. He proposed that if one wishes to preserve the notions of freedom of choice and locality, then the assumption of the absoluteness of observed events must be abandoned, precisely the conclusion advocated by QBism. While it remains premature to declare QBism, or any other interpretation, as definitively correct in capturing the true meaning of quantum mechanics, we can now begin to narrow the field of viable possibilities.

FRAGILE STATES

Cavalcanti now seeks to take this line of inquiry further. In the 2020 experiment, his team employed photon detectors to stand in for Wigner and photons themselves as proxies for Wigner's friend. However, photons are a far cry from the human observers imagined in Wigner's original thought experiment, and most would agree that photons do not qualify as observers in any meaningful sense. The difficulty lies in the extreme fragility of quantum states: even maintaining a molecule composed of a few thousand atoms in a coherent superposition is a great technical challenge, let alone replicating anything resembling the complexity of human cognition.

Nonetheless, Cavalcanti and his collaborators have proposed a bold future direction: performing a similar experiment using an advanced artificial intelligence algorithm running on a large-scale quantum computer. This AI would conduct a simulated quantum measurement in a simulated laboratory environment. Such a setup, he argues, might one day reveal whether the classical concept of objective reality must indeed be relinquished, although that experiment remains a distant goal.

So, after all this, what are the prospects for resolving what quantum mechanics truly tells us about the nature of reality? In many respects, we are no closer to a definitive answer than the early pioneers of the theory, who famously disagreed on its interpretation.

"What we do know for sure is that a certain classical way of looking at the world fails, and we can demonstrate that with mathematical and experimental certainty as much as we can know anything in science," says Cavalcanti." ("The meaning of Quantumness - ScienceDirect")

At present, one must make one's own judgments about which interpretation of quantum mechanics one finds most compelling, guided primarily by theoretical considerations. This often involves deciding which assumptions one is willing to relinquish, and what compromises one is prepared to accept in order to preserve the principles believed most fundamental.

One can speculate that further insight may come from attempts to reconcile quantum mechanics with Einstein's general theory of relativity, which conceptualizes gravity as the curvature of space-time caused by mass. Should a particular interpretation of quantum theory facilitate progress in this area, it would provide a compelling indication of its validity.

It is suggested that foundational experiments may play a crucial role here. The question of whether events are absolute is central to constructing a viable theory of quantum gravity.

In the meantime, meaningful progress has been made by framing the conceptual challenges of quantum mechanics in accessible terms and designing experiments capable of narrowing the field of plausible interpretations. The objective now is to continue refining these efforts and building our understanding through research.

In conclusion, while this exploration of Quantum Mechanics has opened new vistas to many of its strange principles and paradoxes, it has also underscored the inherent complexity and mystery at its core. Like navigating a strange maze, the journey leaves us both more informed and, in some ways, more bewildered. Yet this duality, of being both lost and found, is probably part of the quantum experience itself. Maybe that is the point: understanding will come in its own time, not unlike wisdom passed down from those who've pondered these questions before us.
